We live in a golden age of software reuse. We’ve never before had such a wealth of freely available code, in so many languages, so easy to find and install.

And yet, we’re drowning. We slap together rickety rowboats and toss them out on PyPI Ocean and npm Sea, then act surprised when the changes flood in. We ignore the flood as long as we can, then patch the holes with duct tape and bilge pumps as if they can hold back the tide. They cannot.

It’s a wonderful, horrible problem, and I don’t know what to do about it.

Neckbeard prehistory

For a long time, the flood was just a trickle. Code reuse dates all the way back to subroutines in 1945, arguably the first instance of running the same code in more than one place. Functions in higher-level languages came next, along with linkers, shared libraries, Unix pipes, and so on. Enterprise people eventually embraced and extended this with DCOM, CORBA, Java Beans, SOAP, and a host of other acronyms.

Our current golden age traces its roots back to two different ancestors: Linux and CGI. Linux distributions were some of the first projects that put together a broad range of code and made it all work together. They added installers like dpkg, yum, and apt-get, with packages on CD-ROM and later online, which led directly to our current language-specific package managers.

Likewise, web servers originally only served static files, but CGI paved the way for handling requests dynamically, with code. We quickly started making requests from code too, eventually formalized into REST and WSGI and our current world of SaaS and JSON-based APIs, where code is reused live and updated continuously. Yahoo Pipes embodied this over a decade ago, may it rest in peace.

The dominant form of code reuse now isn’t OS distributions or web APIs, though. If you build a web app these days, you likely start with pip install and npm install. Or composer if you’re on PHP, or gem for Ruby or cargo for Rust or go get for Go or NuGet for C#, and so on. Each one has well over 100k packages, often many more.

npm install everything

As an industry, we’ve jumped into this wholeheartedly. You’ve probably seen run-of-the-mill web applications with hundreds of direct dependencies, thousands if you include the transitive closure of indirect dependencies (which you should). It may feel like a lot, but it works, right? All that glorious code reuse, isn’t that the dream?

In large part, yes. There really is a vast ecosystem of reusable code now, in dozens of languages, easily discoverable, often mature enough to use in production applications. Even docs and licenses are common and standardized. The dream is alive!

It comes with a nightmare, though: how to upgrade without breaking your application. Most package managers default to installing the latest versions of packages. In some ways, this is what you want: the newest features, bug fixes, etc. Code changes over time, though, and those changes often aren’t backward compatible. If you start using foo 1.0, you’ll likely need to adapt your code to 2.0 when it comes around.

That may be okay, but if you’re always installing the latest versions, 2.0 can appear and break you at any time. Fortunately, we know how to prevent this: pin versions. If you develop against version 1.0, put exactly that version in your requirements or lock file, e.g. foo==1.0 in requirements.txt. This tells pip to always install 1.0. Most package managers support this, and some even do it automatically.
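To make “pin versions” concrete, here’s a minimal Python sketch that flags requirements.txt lines that aren’t pinned to one exact version. It assumes a simple file with one requirement per line. Real files can also contain extras, environment markers, hashes, and -r includes, which this sketch doesn’t understand and simply reports as unpinned.

```python
import re
from typing import List

# An exact pin looks like "foo==1.0". Ranges (">="), wildcards ("==1.*"), and
# bare names are all treated as unpinned. Assumes one simple requirement per line.
EXACT_PIN = re.compile(r"[A-Za-z0-9][A-Za-z0-9._-]*\s*==\s*[0-9][^\s;*]*")


def unpinned_requirements(text: str) -> List[str]:
    """Return the requirement lines that aren't pinned to one exact version."""
    unpinned = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop trailing comments and whitespace
        if not line:
            continue
        if not EXACT_PIN.fullmatch(line):
            unpinned.append(line)
    return unpinned


requirements = """\
foo==1.0        # pinned: pip always installs 1.0
bar>=2.3        # range: pip may install any newer release
baz             # unconstrained: pip installs the latest
"""

print(unpinned_requirements(requirements))
```

Here only foo==1.0 counts as pinned; bar and baz can change underneath you on the next install.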

We now know that we’ll always get 1.0, which helps, but 2.0 was released for a reason. Features were added, bugs fixed, security holes patched. Those are all good. We want those things.

So, we upgrade. We regularly check each dependency for new releases, read the changelogs and maybe even the diffs, upgrade each one in isolation, thoroughly test our application, and then carefully deploy and watch for any problems.

This is fine

Who am I kidding? No we don’t. We have thousands of dependencies, and anyway, we have “real work” to do. We put off upgrading as long as possible, until the bugs become intolerable and we’re embarrassed to tell recruiting candidates and our CISO is breathing down our neck about a vulnerability. Then we upgrade everything all at once, dutifully poke at it on staging, fumble through fixing the most obvious breakages, and when it seems ok, throw it over the wall to production and try to forget about the whole episode.

We did this for a while – many of us still do – but it’s not great. The new hotness is to apply the agile maxims of continuous integration and “if it hurts, do it more often,” which here means upgrading more frequently. If you apply each dependency release as soon as it comes out, in isolation, it’s easier to identify and fix breakages. You don’t have to guess which upgrade broke you; there’s only one suspect.
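A minimal sketch of the upgrade-in-isolation idea, assuming a hypothetical run_tests hook that stands in for a real CI run. Each pending upgrade is applied by itself on top of the last known-good set. It’s kept if the tests pass and set aside if they fail. Because only one version changes per test run, each failure has exactly one suspect.

```python
from typing import Callable, Dict, List, Tuple

Versions = Dict[str, str]


def upgrade_in_isolation(
    pinned: Versions,
    upgrades: Versions,
    run_tests: Callable[[Versions], bool],
) -> Tuple[Versions, List[str]]:
    """Apply each upgrade on its own; return (versions kept, upgrades that broke)."""
    current = dict(pinned)
    broken: List[str] = []
    for name, new_version in upgrades.items():
        # Change exactly one dependency relative to the last known-good set.
        candidate = {**current, name: new_version}
        if run_tests(candidate):
            current = candidate  # passed: keep it and build on it
        else:
            broken.append(name)  # failed: only one thing changed, so it's the suspect
    return current, broken


# Hypothetical stand-in for a test suite: pretend the app breaks on bar 3.x.
def fake_run_tests(versions: Versions) -> bool:
    return not versions["bar"].startswith("3.")


kept, broken = upgrade_in_isolation(
    pinned={"foo": "1.0", "bar": "2.3", "baz": "0.9"},
    upgrades={"foo": "1.1", "bar": "3.0", "baz": "0.10"},
    run_tests=fake_run_tests,
)
print(kept)    # {'foo': '1.1', 'bar': '2.3', 'baz': '0.10'}
print(broken)  # ['bar']
```

The cost is one test run per upgrade, which is exactly why this only stays practical once it’s automated.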

Plus, when lazy engineers have to do anything often enough, they’re motivated to automate, and the industry is happy to oblige with new tools. GitHub’s Dependabot is probably the best known example, along with Renovate, Depfu, Snyk, and others. These tools parse your requirements files, watch for new releases, and automatically send PRs that upgrade them.
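Here is roughly what these bots do, reduced to a toy. The sketch reads the pins, compares each one against the newest release, and proposes one upgrade per outdated package. The LATEST dict is a hypothetical stand-in for querying the package index. The version parser only understands plain dotted integers. Real tools also handle pre-releases, ranges, lock files, grouping, and scheduling.

```python
from typing import Dict, List, Tuple

# Hypothetical snapshot of each package's newest release. A real tool
# would query the package index instead of using a hardcoded dict.
LATEST = {"foo": "2.0", "bar": "2.3", "baz": "0.10"}


def parse_version(v: str) -> Tuple[int, ...]:
    # Assumes plain dotted integers like "1.10.2"; anything else raises ValueError.
    return tuple(int(part) for part in v.split("."))


def parse_pins(text: str) -> Dict[str, str]:
    """Map package name -> pinned version for every "name==version" line."""
    pins = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if "==" in line:
            name, version = (s.strip() for s in line.split("==", 1))
            pins[name] = version
    return pins


def upgrade_prs(requirements: str, latest: Dict[str, str]) -> List[str]:
    """One proposed PR title per outdated pin, like an upgrade bot might open."""
    titles = []
    for name, pinned in sorted(parse_pins(requirements).items()):
        newest = latest.get(name)
        if newest and parse_version(newest) > parse_version(pinned):
            titles.append(f"Bump {name} from {pinned} to {newest}")
    return titles


requirements = "foo==1.0\nbar==2.3\nbaz==0.9\n"
for title in upgrade_prs(requirements, LATEST):
    print(title)
```

Comparing versions as tuples of integers matters here. As plain strings, "0.10" sorts before "0.9", so a naive comparison would miss the baz upgrade.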

Millennium Edition

This is great, but it’s only one side of the coin. The other side is compatibility. How do we know when we need to change our own code to handle a dependency upgrade?

Some versioning schemes try to tell us. The standard MAJOR.MINOR.PATCH format was popularized by commercial software, which incremented major versions on a schedule, or based on organizational roadmaps, or whenever they needed to sell more units. Open source originally followed this, incrementing versions whenever changes seemed “big enough.”

More recent versioning schemes, on the other hand, tackle the compatibility question head on. SemVer requires updating the major version whenever a change is backward incompatible, aka breaking. CalVer derives versions from calendar dates for easier comparison. More exotic systems combine both, or forbid breaking changes altogether, or turn versions into a joke, or give up entirely and abdicate to Linux distributions or content-addressable hashes.
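Under SemVer, the compatibility question reduces to comparing version numbers. Here is a sketch, assuming strict MAJOR.MINOR.PATCH strings with no pre-release or build suffixes. One real wrinkle from the SemVer spec is that major version zero (0.y.z) is for initial development, where anything may change at any time. For that reason, the sketch flags every 0.x upgrade as potentially breaking.

```python
from typing import Tuple


def parse_semver(v: str) -> Tuple[int, int, int]:
    # Assumes strict MAJOR.MINOR.PATCH with no pre-release or build metadata.
    major, minor, patch = (int(x) for x in v.split("."))
    return major, minor, patch


def semver_bump(old: str, new: str) -> str:
    """Classify an upgrade as 'major', 'minor', or 'patch'."""
    o, n = parse_semver(old), parse_semver(new)
    if n <= o:
        raise ValueError(f"{new} is not newer than {old}")
    if n[0] != o[0]:
        return "major"
    if n[1] != o[1]:
        return "minor"
    return "patch"


def may_break(old: str, new: str) -> bool:
    """Under SemVer, only a major bump may be backward incompatible,
    except during 0.y.z initial development, where anything may change."""
    if parse_semver(old)[0] == 0:
        return True
    return semver_bump(old, new) == "major"


print(semver_bump("1.4.2", "1.5.0"), may_break("1.4.2", "1.5.0"))  # minor False
print(semver_bump("1.4.2", "2.0.0"), may_break("1.4.2", "2.0.0"))  # major True
```

None of this works for CalVer or ad hoc schemes, where the numbers say nothing about compatibility. Even under SemVer, it’s a promise, not a guarantee.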

These can help, sometimes, but they’re not guarantees. It’s not always easy to know which versioning scheme a given package follows, if any. Projects occasionally switch from one scheme to another. Backward compatibility can be subtle; it’s easy to introduce breaking changes in a minor or patch version accidentally. Other times, a project may carefully follow SemVer and bump the major version for a breaking change, but in a feature you don’t use. Or you use something the developers consider private and not part of the official API, and therefore not subject to versioning guarantees at all. Oops.

It worked on my machine

We have accepted wisdom for handling this: automated testing. We floss our teeth, we change the oil in our cars (for now), and we write tests and run them in CI. Tests are aimed primarily at our own code, but if we upgrade a dependency, and the tests all pass, it’s likely to be safe too.

Of course, in reality, no one has perfect test coverage, so we combine tests with other safeguards like code review and manual QA. After a developer finishes a PR, they send it to another developer, who reads it and tries to determine if anything is wrong or missing. Similarly, developers often try out their changes manually to make sure they work in practice. These additional layers only catch some issues, not all, but together they add up.

Dependencies are different from your own code, though. You didn’t write them, you don’t know their codebases, and they’re orders of magnitude bigger, cumulatively, than your app. You may occasionally dive into their source to debug an issue, or to see how something works, but not often. You’re not going to read the code changes in every new version, and if you did, it would take extraordinary effort to understand them all.

Manual testing isn’t much better. When you deploy your own changes, you generally know how to check that they’re working. When a direct dependency changes, you may know roughly where it’s being used, but only roughly, and it’s not always easy to know how a new release will affect you. Indirect dependencies, you may not know at all.

If we waved a magic wand and gave ourselves perfect test coverage, would that do the trick? Maybe. By definition, our tests would exercise all code paths, including dependencies. That’s quite a magic wand though. Automated testing has grown massively over the last couple of generations and replaced wide swathes of human QA, but UI, configuration, data, and many other areas still stubbornly resist testing.

So, perfect test coverage is a pipe dream, dependency changes are difficult to review or test manually, and the ease of modern code reuse has swelled a trickle of upgrades into a flood. We’re drowning.

These 10 weird tricks

To recap, the current best practices seem to be:

- Pin all dependencies…unless you’re a library, then pin as little as possible. Don’t think too hard about the difference

- Write lots of tests

- Use a tool to generate upgrade PRs

- When you get one:

  - If it’s a new major version, check the release notes for breaking changes. Otherwise, roll the dice, hope you feel lucky

  - Update your code…maybe?

  - Read the source diffs for the new ver…no you won’t, who am I kidding

  - If your tests fail, find and fix the breakages

  - Guess what to poke at manually

  - Repeat until everything works

  - Merge the upgrade

- Deploy early and often

- Monitor for breakages, fix them as soon as you (read: your users) notice

Upgrade broke your app in production? Tough luck; debug it and fix it. CalVer or other non-SemVer scheme? You’re on your own. Interested in a new feature? Whoa there, we’re not actually trying to improve anything here, just keep our heads above water.

More importantly, remember those thousands of dependencies? Release schedules vary, but even if we conservatively assume just a few releases per year on average, add those up and you’re looking at a dozen dependency upgrades every business day. I recently pinned all dependencies and enabled weekly Dependabot PRs on a handful of side projects, and I now get 10-20 PRs in my inbox every Monday. Ugh.
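Here is the back-of-the-envelope math, with the inputs made explicit. These numbers are illustrative assumptions, taken from the low end of “thousands” of dependencies and “a few” releases a year. They are not measurements.

```python
# Back-of-the-envelope estimate of the upgrade flood.
# All inputs are illustrative assumptions, not measurements.
dependencies = 1_000          # low end of "thousands" of direct + transitive deps
releases_per_year = 3         # "a few releases per year on average"
business_days_per_year = 250  # roughly 52 weeks x 5 days, minus holidays

upgrades_per_day = dependencies * releases_per_year / business_days_per_year
print(f"{upgrades_per_day:.1f} upgrades per business day")  # 12.0 upgrades per business day
```

Doubling either the dependency count or the release rate doubles the result, so real projects can easily land well above a dozen a day.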

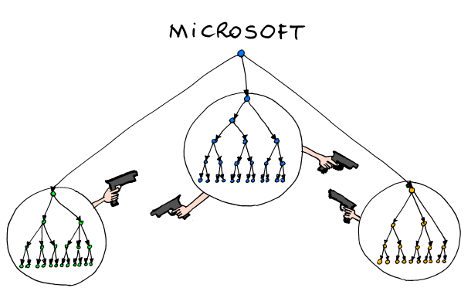

Apart from burying your head in the sand, are there any alternatives? Well…kind of. Many older big tech companies – Google, Microsoft, IBM, Apple – famously avoided most external dependencies. They used a few, like Linux and SQLite, but not many, and often vendored them into their internal codebases and treated them similarly to internal code. This is pejoratively referred to as NIH (“not invented here”) syndrome, often for good reason, but it does avoid the upgrade flood.

Naturally, it also means that they develop more of their own code instead, which they then reuse across teams internally. Those teams eventually end up with the same problem: how to upgrade their internal dependencies. The difference is, as centralized organizations, they have more tools at their disposal to drive and manage those upgrades. They can enforce standardized versioning, upgrade codebases in bulk, monitor tests across projects, etc.

For the rest of us, this isn’t really feasible. A big company can afford to build everything in house when that house has tens of thousands of engineers, but most places don’t have that luxury. Reusing external code is a huge advantage, and when everyone does it, it’s also a competitive necessity.

Interestingly, platforms like GitHub now have enough scale to play some of the same tricks. Dependabot compatibility scores are a clever example: count the repos with CI runs that passed or failed on a given upgrade, then include that fraction as a warning label on those upgrade PRs. This still feels like duct tape though; new and improved, maybe, but still fundamentally inadequate.
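Here is a sketch of a compatibility score as described above: the share of repos whose CI passed on a given upgrade. GitHub’s exact formula and thresholds aren’t described here. The one-result-per-repo rule and the min_repos cutoff below are therefore assumptions for illustration, not Dependabot’s actual behavior.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional


@dataclass
class CiResult:
    repo: str
    passed: bool


def compatibility_score(results: Iterable[CiResult], min_repos: int = 5) -> Optional[float]:
    """Percentage of repos whose CI passed on one specific upgrade (e.g. foo 1.0 -> 2.0).

    Hypothetical rules: each repo counts once, with its last result winning
    (results assumed to be in chronological order). With fewer than
    `min_repos` repos there's too little data, so return None instead of a score.
    """
    latest: Dict[str, bool] = {}
    for r in results:
        latest[r.repo] = r.passed
    if len(latest) < min_repos:
        return None
    return 100 * sum(latest.values()) / len(latest)


# Eight repos tried the upgrade; CI failed in two of them.
results = [CiResult(repo=f"repo{i}", passed=i not in (3, 7)) for i in range(8)]
print(compatibility_score(results))      # 75.0
print(compatibility_score(results[:3]))  # None
```

Even a high score only says other people’s tests passed. It says nothing about the code paths your app uses that those tests don’t cover.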

This is water

So we’re drowning, and I don’t know what to do about it. People agree, but they seem surprisingly unconcerned. They have bigger problems, or they think Dependabot and friends will solve it, or they just can’t be bothered. Security people are sounding alarms about typosquatting and dependency confusion and supply chain security, but that’s a different conversation. The occasional log4j cannonball blast gets everyone’s attention, but our rickety little rowboats all have thousands of dependency pinholes, and we’re all slowly sinking.

If you’re staying afloat somehow, please let me know, I’d love to check out your boat. In the meantime, pardon me, I have a fresh batch of Dependabot PRs in my inbox, and they’re not going to review themselves.

Matthew Childs / Reuters

Randall Munroe / XKCD

All About Windows Phone

Fox / The Simpsons

Manu Cornet

I’m trying out a CI workflow that auto-merges Dependabot PRs for patch and minor version upgrades if CI passes. More duct tape, not the fundamental rethink we probably still need here, but it could help. We’ll see.
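Here is a sketch of that auto-merge rule as plain decision logic, not an actual GitHub Actions workflow. It assumes dotted-integer versions and a CI result supplied from elsewhere. Note that it treats 0.x minor bumps as safe, which SemVer itself doesn’t promise.

```python
from typing import Tuple


def parse(v: str) -> Tuple[int, ...]:
    # Assumes plain dotted integers; anything else raises ValueError.
    return tuple(int(x) for x in v.split("."))


def should_auto_merge(old: str, new: str, ci_passed: bool) -> bool:
    """Hypothetical policy: auto-merge patch/minor upgrades only when CI is green."""
    o, n = parse(old), parse(new)
    same_major = n[0] == o[0]
    return ci_passed and n > o and same_major


for old, new, ci in [("1.2.3", "1.2.4", True), ("1.2.3", "1.3.0", False), ("1.2.3", "2.0.0", True)]:
    verdict = "auto-merge" if should_auto_merge(old, new, ci) else "needs review"
    print(old, "->", new, verdict)
```

Major upgrades and red CI runs still land in a human’s inbox, which shrinks the pile but doesn’t drain it.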

Re the average project’s number of dependencies, a stat from GitHub:

From Introducing Open Source Insights data in BigQuery to help secure software supply chains:

Ultimately we need something like a credit bureau or Underwriters Laboratories for dependencies. It could be decentralized with some sort of trust metric (I’d love it if people like @taviso could make a living from code reviews). As you point out, it is a huge amount of work that needs a sustainable economic model to be funded. I think insurance could be the way forward. Cybersecurity insurance premiums are exploding right now because of the adverse selection problem. If insurers required SBOMs to be certified through some attestation service, that could provide a source of funding for the necessary code reviews.

Ryan,

Love the article. Would you be open to a 15 mins conversation? I would like to talk to you more about how this is playing out in your world and what would actually help.

This is what happens with dynamically linked libraries. Ironically, Apple did it right the entire time. Get lmao’d.

nicely put. It’s hard to maintain, and hygiene helps.