I’m a big believer in the demarcation problem: in the general case, there’s no shortcut to determining objective truth. It’s similar to the halting problem in computer science, which says there’s no general algorithm that can decide whether an arbitrary program will finish. Likewise, if we want to know whether a claim is true, we have to go through the scientific method. It’s the only process we know that works.

It’s far from ideal, though. Sometimes the claim is fiendishly complex, sprawling, or only loosely defined. The scientific method can’t always come up with a simple, easy answer, especially not on the first try. Instead, it approaches the right answer, narrowing in over years or decades, with missteps and false starts along the way.

This means that policing misinformation is fraught, at best. Forget policing; we can’t even reliably identify misinformation, at least not always, or in any reasonable time frame.

This is unfair, of course. We may not be able to determine 100% objective truth every time, but we can pretty damn often. Flat earth? No, it’s not. Vaccines implant 5G microchips? Come on. Lizard people from outer space? 🤦

The line is fuzzy, though. COVID was a perfect example: a brand new disease, a global public health crisis, and lots of unproven, uncertain science. Our understanding changed fast – How is it transmitted? Do masks help? Which medicine treats it? – and communicating the right answers clearly was a matter of life and death.

The Discourse ranged far and wide. On one side, scientists shared findings and compared notes with each other. On another side, public health organizations advised citizens on how to protect themselves. The boundary between the two conversations was fuzzy: if a new experiment contradicted scientists’ understanding so far, was it an important step in the scientific method? Or misinformation that would confuse and endanger the public? Or both?

Food is another great example. For decades, nutrition scientists convinced themselves that fat was bad and carbohydrates, particularly grains, were good. The USDA designed an entire food pyramid around this, taught a generation of kids to avoid olive oil and binge on white bread, and inspired food engineers to spend billions on dangerous boondoggles like Olestra.

We eventually figured out that this was backward. Many fats are good for us, and many grains and simple carbohydrates are bad, at least in large amounts. The pendulum may have swung too far the other way, giving us dangerous new extremes like Atkins, but it was at least one big step closer to the truth. What if we’d suppressed or blocked everyone who questioned the “fat bad, grains good” party line? How much longer would it have taken us to come around? How many people would have suffered and died prematurely from unhealthy diets they thought were good for them?

The motivations for policing misinformation are similarly fuzzy. Sometimes it’s an easy call – drinking bleach will clearly hurt you, and won’t cure or prevent COVID – but sometimes it’s harder. The earth isn’t flat, sure, but does that mean we should take down flat earth content? Probably not. What if the leading flat earthers know it’s wrong, and they’re only doing it to fleece suckers into buying their merch? Can we know their intent? If so, should we take down their posts, or their accounts?

More egregiously, the lizard people conspiracy is clearly ridiculous, and not a direct call to violence, but it’s linked to PizzaGate, which led a crazed gunman to shoot up a family restaurant with an AR-15. Once that happened, should we have taken down PizzaGate content and accounts? How about lizard people content? Or QAnon more broadly? How about election denial, which is related? Where should we draw the lines?

In case it’s not obvious, I’m personally 100% pro science, pro shared facts, and anti conspiracy theories. Vaccines are good, they don’t implant microchips inside us, Hillary Clinton is clearly not a child molester, etc. Regardless, the debate over policing misinformation long predates the Internet, and I don’t know where all the lines should be drawn.

So, I think we should tread lightly. When the truth is clear, and misinformation directly leads to meaningful harm, ok. Probably. But maybe err on the side of tolerance. It may be a lightning rod these days, but I believe in freedom of speech, especially speech we disagree with. Or in the case of misinformation, speech we think is objectively wrong. Who knows? Someday, it might turn out that we’re the ones who are wrong.

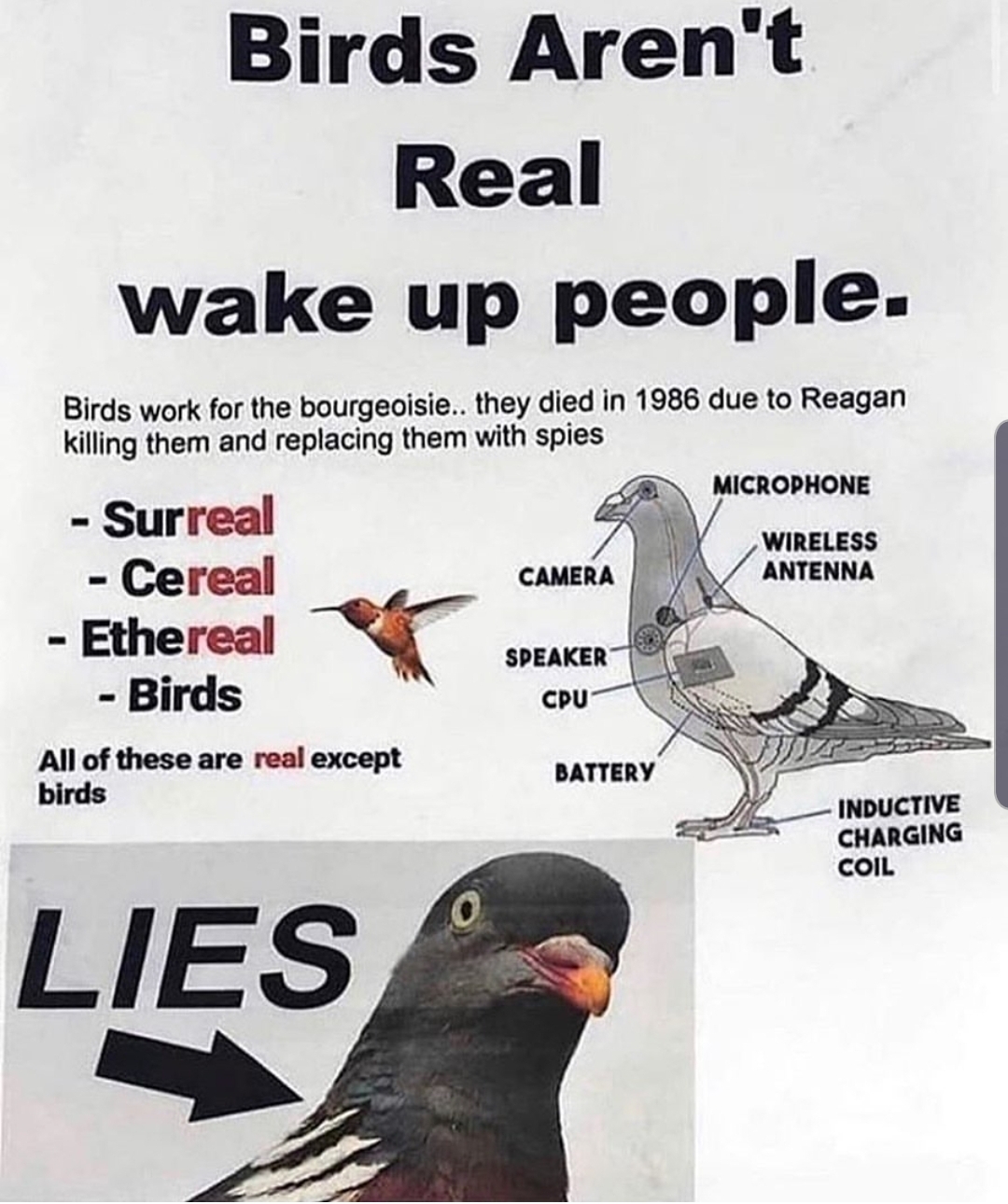

(Also, birds aren’t real. Spread the word.)

I’m highly aware of how deep and involved the major platforms’ content policies are. They’ve thought about all the specifics way more seriously than I have here, of course, and they’ve been doing it for way longer.

I’m not second-guessing them on any individual issue; I’m just encouraging a light touch, and the humility that we may not be as sure of what’s right and wrong as we often think we are, even (especially!) when it comes to facts.

A friend sent this awesome infographic taxonomy of conspiracy theories and related misinformation: